Faster R-CNN: Overview

Contents

- 1. Introduction

- 2. Architecture of Faster R-CNN

- 3. Model Workflow

- 4. Advantages and Disadvantages

- 5. Training Faster R-CNN (Small Demo)

- 6. References

1. Introduction

Faster R-CNN [1] is a two-stage object detection model that achieved state-of-the-art accuracy when it was introduced. It combines a Region Proposal Network (RPN) with a detection network to build a fast and accurate detection system. Earlier methods rely on hand-engineered region proposal algorithms such as Selective Search. Faster R-CNN instead uses a fully convolutional RPN to generate region proposals directly from image features. Because the RPN and the detection network share convolutional layers, proposals add very little computation on top of the detector itself.

The model consists of two stages:

- Stage 1: The RPN proposes candidate regions likely to contain objects.

- Stage 2: The detection network (a Fast R-CNN head) uses these proposals to classify objects and refine their bounding boxes.

2. Architecture of Faster R-CNN

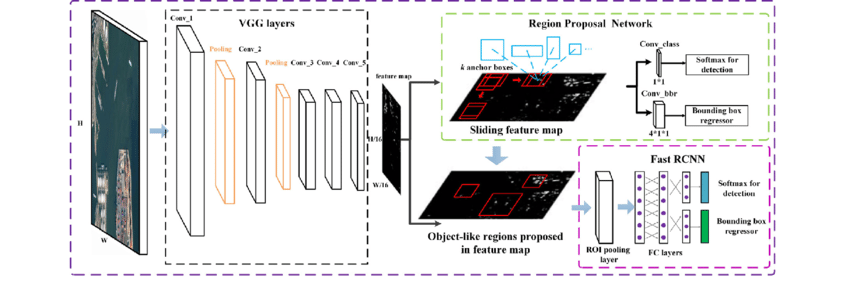

Faster R-CNN model architecture

2.1 CNN Backbone

The CNN backbone is the convolutional network that extracts features from the input image. Common choices are VGG16 and ResNet; the original paper used ZF and VGG16 [1]. The resulting feature maps are shared by the RPN and the final classification network, so the expensive convolutions run only once per image.
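Because the backbone downsamples the image, each feature-map cell corresponds to a 16×16-pixel step in the input for VGG16 (total stride 16 in [1]). The small sketch below computes the feature-map size for a 600×1000 input (the paper rescales the shorter side to 600 pixels) and the resulting number of anchors. It assumes VGG16's layout of four 2×2 max-pools before conv5_3 with size-preserving 3×3 convolutions; the function names are hypothetical.

```python
def feature_map_size(height, width, num_pools=4, ceil_mode=False):
    """Spatial size after `num_pools` 2x2, stride-2 max-pools (stride 16 for 4).

    Assumes all conv layers preserve size (3x3, padding 1), as in VGG16.
    ceil_mode=False floors each halving (PyTorch nn.MaxPool2d default);
    ceil_mode=True rounds up, as Caffe-style pooling does.
    """
    for _ in range(num_pools):
        if ceil_mode:
            height, width = -(-height // 2), -(-width // 2)  # ceil division
        else:
            height, width = height // 2, width // 2          # floor division
    return height, width


def num_anchors(height, width, anchors_per_location=9, **kwargs):
    """Total anchors = feature-map locations x anchors per location."""
    fh, fw = feature_map_size(height, width, **kwargs)
    return fh * fw * anchors_per_location


print(feature_map_size(600, 1000))                  # (37, 62)
print(feature_map_size(600, 1000, ceil_mode=True))  # (38, 63)
print(num_anchors(600, 1000))                       # 20646
```

The rounding mode changes the count slightly. With ceil rounding the map is 38×63, about 2,400 locations, which lines up with the roughly 20,000 anchors per image mentioned in [1].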

2.2 Region Proposal Network (RPN)

The RPN replaces hand-crafted region proposal methods such as Selective Search. It is a small convolutional network that slides over the backbone feature map and generates region proposals. At each feature-map location it considers several reference boxes, called “anchor boxes”, with different scales and aspect ratios (3 scales × 3 aspect ratios = 9 anchors per location in [1]). For each anchor, the RPN predicts:

- An objectness score (likelihood of containing an object)

- Bounding box coordinate adjustments relative to the anchor (bounding box regression)
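The anchor mechanism is easy to sketch. The hypothetical function below assumes the default configuration from [1]: stride 16, box areas of 128², 256² and 512² pixels, and aspect ratios 1:2, 1:1 and 2:1. It places 9 anchors around the image-space center of each feature-map cell. The +0.5 cell-center offset is an assumption, since implementations differ slightly here.

```python
import math


def generate_anchors(feat_h, feat_w, stride=16,
                     scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return every anchor as an (x1, y1, x2, y2) tuple in input-image pixels.

    Cell (i, j) of the feature map is mapped to the image-space center
    ((j + 0.5) * stride, (i + 0.5) * stride). Each center gets one box per
    (scale, ratio) pair, with ratio = height / width and area = scale ** 2.
    """
    anchors = []
    for i in range(feat_h):
        for j in range(feat_w):
            cx, cy = (j + 0.5) * stride, (i + 0.5) * stride
            for scale in scales:
                for ratio in ratios:
                    w = scale / math.sqrt(ratio)
                    h = scale * math.sqrt(ratio)
                    anchors.append((cx - w / 2, cy - h / 2,
                                    cx + w / 2, cy + h / 2))
    return anchors


anchors = generate_anchors(feat_h=2, feat_w=3)
print(len(anchors))  # 2 * 3 locations * 9 anchors = 54
x1, y1, x2, y2 = anchors[0]  # first cell, scale 128, ratio 0.5
print(round(x2 - x1, 1), round(y2 - y1, 1))  # 181.0 90.5
```

Anchors near the border extend past the image. During training, [1] ignores such cross-boundary anchors so they do not contribute to the loss.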

2.3 RoI Pooling & Classification

Region proposals from the RPN have different sizes, so RoI Pooling converts the features inside each proposal into a fixed-size map (e.g., 7×7). These fixed-size features are then passed through fully connected layers to:

- Classify the object in the region (one of the object classes, or background)

- Refine the bounding box coordinates further (bounding box regression)
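RoI Pooling itself is a max-pool over a grid of bins laid across each proposal. The minimal single-channel sketch below assumes the RoI is already expressed in feature-map cells (image coordinates divided by the stride and rounded). It uses a 2×2 output instead of 7×7 so the result is easy to check by hand. The floor/ceil bin edges are one common choice, not necessarily identical to every implementation.

```python
import math


def roi_max_pool(feature, roi, output_size=(2, 2)):
    """Max-pool one RoI of a single-channel feature map to a fixed grid.

    feature: list of rows, i.e. an H x W grid of numbers.
    roi: (x1, y1, x2, y2) in feature-map cells, inclusive on both ends.
    """
    x1, y1, x2, y2 = roi
    roi_h, roi_w = y2 - y1 + 1, x2 - x1 + 1
    out_h, out_w = output_size
    bin_h, bin_w = roi_h / out_h, roi_w / out_w
    pooled = []
    for ph in range(out_h):
        # Floor the start and ceil the end so every bin holds at least one cell.
        hstart = y1 + math.floor(ph * bin_h)
        hend = y1 + math.ceil((ph + 1) * bin_h)
        row = []
        for pw in range(out_w):
            wstart = x1 + math.floor(pw * bin_w)
            wend = x1 + math.ceil((pw + 1) * bin_w)
            row.append(max(feature[y][x]
                           for y in range(hstart, hend)
                           for x in range(wstart, wend)))
        pooled.append(row)
    return pooled


# 4x4 toy feature map with values 0..15 laid out row by row.
feature = [[4 * y + x for x in range(4)] for y in range(4)]
print(roi_max_pool(feature, (0, 0, 3, 3)))  # [[5, 7], [13, 15]]
print(roi_max_pool(feature, (1, 0, 3, 2)))  # [[6, 7], [10, 11]]
```

Whatever the RoI's size, the output is always `output_size`. This is what lets proposals of any shape feed the same fully connected layers.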

3. Model Workflow

🔹 Stage 1: Region Proposal (RPN)

- Input image is passed through the CNN backbone (VGG16, ResNet, etc.) to generate a feature map.

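- The RPN operates on the feature map to generate region proposals: boxes likely to contain objects, each with an objectness score. Highly overlapping proposals are removed with non-maximum suppression (NMS), and the top-ranked ones are passed on.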
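Turning raw RPN outputs into proposals takes three steps: applying the predicted offsets to each anchor, clipping to the image, and suppressing overlapping boxes. The sketch below uses the box parameterization from [1]: t_x = (x - x_a)/w_a, t_y = (y - y_a)/h_a, t_w = log(w/w_a), t_h = log(h/h_a). It then applies greedy NMS at IoU 0.7 and keeps the top N boxes; 300 is the test-time value used in [1]. The function names are hypothetical, and boxes are plain (x1, y1, x2, y2) tuples. Details such as removing tiny boxes are omitted.

```python
import math


def decode(anchor, delta):
    """Apply predicted offsets (tx, ty, tw, th) to an (x1, y1, x2, y2) anchor."""
    ax1, ay1, ax2, ay2 = anchor
    aw, ah = ax2 - ax1, ay2 - ay1
    acx, acy = ax1 + aw / 2, ay1 + ah / 2
    tx, ty, tw, th = delta
    # Center shifts are relative to anchor size; width/height scale in log space.
    cx, cy = acx + tx * aw, acy + ty * ah
    w, h = aw * math.exp(tw), ah * math.exp(th)
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def clip(box, img_w, img_h):
    """Clamp box coordinates to the image boundaries."""
    x1, y1, x2, y2 = box
    return (min(max(x1, 0), img_w), min(max(y1, 0), img_h),
            min(max(x2, 0), img_w), min(max(y2, 0), img_h))


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh):
    """Greedy NMS: visit boxes by descending score, keep those not overlapping a kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_thresh for k in keep):
            keep.append(i)
    return keep


def propose(anchors, deltas, scores, img_w, img_h, nms_thresh=0.7, top_n=300):
    """Decode, clip, run NMS, and return up to top_n (box, score) pairs."""
    boxes = [clip(decode(a, d), img_w, img_h) for a, d in zip(anchors, deltas)]
    keep = nms(boxes, scores, nms_thresh)[:top_n]
    return [(boxes[i], scores[i]) for i in keep]


anchors = [(0, 0, 100, 100), (5, 5, 105, 105), (200, 200, 300, 300)]
deltas = [(0.0, 0.0, 0.0, 0.0)] * 3  # pretend the RPN predicted no adjustment
scores = [0.9, 0.8, 0.7]             # objectness scores
for box, score in propose(anchors, deltas, scores, img_w=400, img_h=400):
    print(box, score)
```

The second anchor overlaps the first with IoU of about 0.82, which is above 0.7, so it is suppressed and two proposals remain.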

🔹 Stage 2: Object Detection

- Each proposal is projected onto the feature map, and RoI Pooling converts the corresponding region into a fixed-size feature map.

- These regions are fed into the classification and regression network to:

  - Predict the class label of the object

  - Refine the bounding box

- The final output is a list of bounding boxes with object classes and confidence scores.
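The last step turns each RoI's classifier scores into a labeled detection. The minimal sketch below assumes the head outputs one logit per class, with index 0 reserved for background, and that the boxes have already been refined. A full detector would also apply per-class box regression and per-class NMS; both are omitted to keep the example short. The names and the 0.5 threshold are illustrative.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def to_detections(boxes, class_logits, class_names, score_thresh=0.5):
    """Turn per-RoI head outputs into (box, label, score) triples.

    Index 0 of each logit vector is treated as background (an assumption).
    """
    detections = []
    for box, logits in zip(boxes, class_logits):
        probs = softmax(logits)
        # Pick the most likely non-background class for this RoI.
        cls = max(range(1, len(probs)), key=lambda c: probs[c])
        if probs[cls] >= score_thresh:
            detections.append((box, class_names[cls], probs[cls]))
    return detections


names = ["background", "cat", "dog"]
boxes = [(0, 0, 10, 10), (5, 5, 20, 20)]
logits = [[0.0, 3.0, 1.0],   # confident "cat"
          [4.0, 0.5, 0.2]]   # mostly background, dropped by the threshold
for box, label, score in to_detections(boxes, logits, names):
    print(box, label, round(score, 3))
```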

4. Advantages and Disadvantages

✅ Advantages

- Faster than its predecessors (R-CNN, Fast R-CNN): the RPN replaces slow external proposal methods and shares feature maps with the detector, making region proposals nearly cost-free.

- High accuracy: when published, it achieved state-of-the-art results on PASCAL VOC 2007, 2012, and MS COCO [1].

- End-to-end training: the RPN and detection network can be optimized jointly (the original paper also describes a 4-step alternating training scheme).

- Flexible: easy to swap backbones (VGG, ResNet…) or incorporate new improvements.

❌ Disadvantages

- Not real-time: the paper reports about 5 fps with VGG-16 even on a GPU, and CPU inference is considerably slower.

- More complex to implement than one-stage detectors such as YOLO or SSD.

- Poorly suited to mobile devices: heavy backbones such as VGG-16 make the model large and compute-intensive.

5. Training Faster R-CNN (Small Demo)

Download source code
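One training detail worth seeing in code is how anchors are labeled for the RPN loss. Following the rule described in [1], an anchor is positive in two cases: its IoU with any ground-truth box exceeds 0.7, or it is the best match for some ground-truth box. It is negative if its IoU is below 0.3 for all ground-truth boxes, and ignored otherwise. The sketch below is a pure-Python illustration with hypothetical names, not the downloadable demo's code.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def label_anchors(anchors, gt_boxes, pos_thresh=0.7, neg_thresh=0.3):
    """Return 1 (object), 0 (background) or -1 (ignored) for each anchor."""
    if not gt_boxes:
        return [0] * len(anchors)  # nothing to detect: every anchor is background
    ious = [[iou(a, g) for g in gt_boxes] for a in anchors]
    labels = []
    for row in ious:
        best = max(row)
        if best > pos_thresh:
            labels.append(1)
        elif best < neg_thresh:
            labels.append(0)
        else:
            labels.append(-1)  # in-between overlap: excluded from the loss
    # Also mark the best-matching anchor(s) of each ground-truth box as positive,
    # so every object gets at least one positive anchor.
    for j in range(len(gt_boxes)):
        col = [row[j] for row in ious]
        best = max(col, default=0.0)
        if best > 0:
            for i, value in enumerate(col):
                if value == best:
                    labels[i] = 1
    return labels


gt = [(0, 0, 100, 100)]
anchors = [(0, 0, 100, 100), (50, 50, 150, 150),
           (20, 0, 120, 100), (300, 300, 400, 400)]
print(label_anchors(anchors, gt))  # [1, 0, -1, 0]
```

The third anchor has an IoU of about 0.67, so it is ignored. If it were the only anchor overlapping the object, the best-match rule would make it positive instead.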

6. References

[1] S. Ren, K. He, R. Girshick, J. Sun. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv:1506.01497v3 [cs.CV], 2016. 🔗 https://arxiv.org/abs/1506.01497